Setting new standards for financial confidence with AI

I led the design of the Financial Test Suite and Agent, defining a co-creation canvas pattern that has become the standard at Workday for financial agentic experiences. This interface moves beyond the ubiquitous chat interaction by merging conversational AI with familiar financial grids. It addresses the core friction of financial AI: users want natural language to initiate workflows but require dense, structured grids to monitor them. This hybrid model allows professionals to generate, verify, and edit data at speed and scale.

The impact

The product is currently in Early Access (EA), and initial deployment indicates an 84% reduction in manual testing time.

Following its announcement and demo at Workday Rising, a record 61 customers, including Netflix and Salesforce, expressed interest in joining our design partner group to develop this product.

Early feedback has been highly positive, with one customer stating that the ability to configure custom tests is "an absolute game changer".

The initial content map I fed into Google Gemini to generate realistic data

Rising pressure

The ask started small: create some quick visuals for a leadership meeting showing what continuous, automated financial integrity could look like. Within days the project was running at full steam, with a 7-week deadline to launch at Workday Rising.

The core problem is massive: current methods of verifying financial data are manual and prone to error. As one customer described, at month end, accounting teams were "working till 1:00 a.m. essentially eye-balling data" to verify routine transactions.

The compressed schedule forced a difficult trade-off: we had to bypass deep discovery. To mitigate this, I utilised Gemini as a synthetic research partner, generating detailed Procure-to-Pay test scenarios to validate with Product Managers daily. This allowed us to leapfrog weeks of domain immersion and deliver a credible hero use case.

Two of the three initial screens delivered to leadership for buy-in

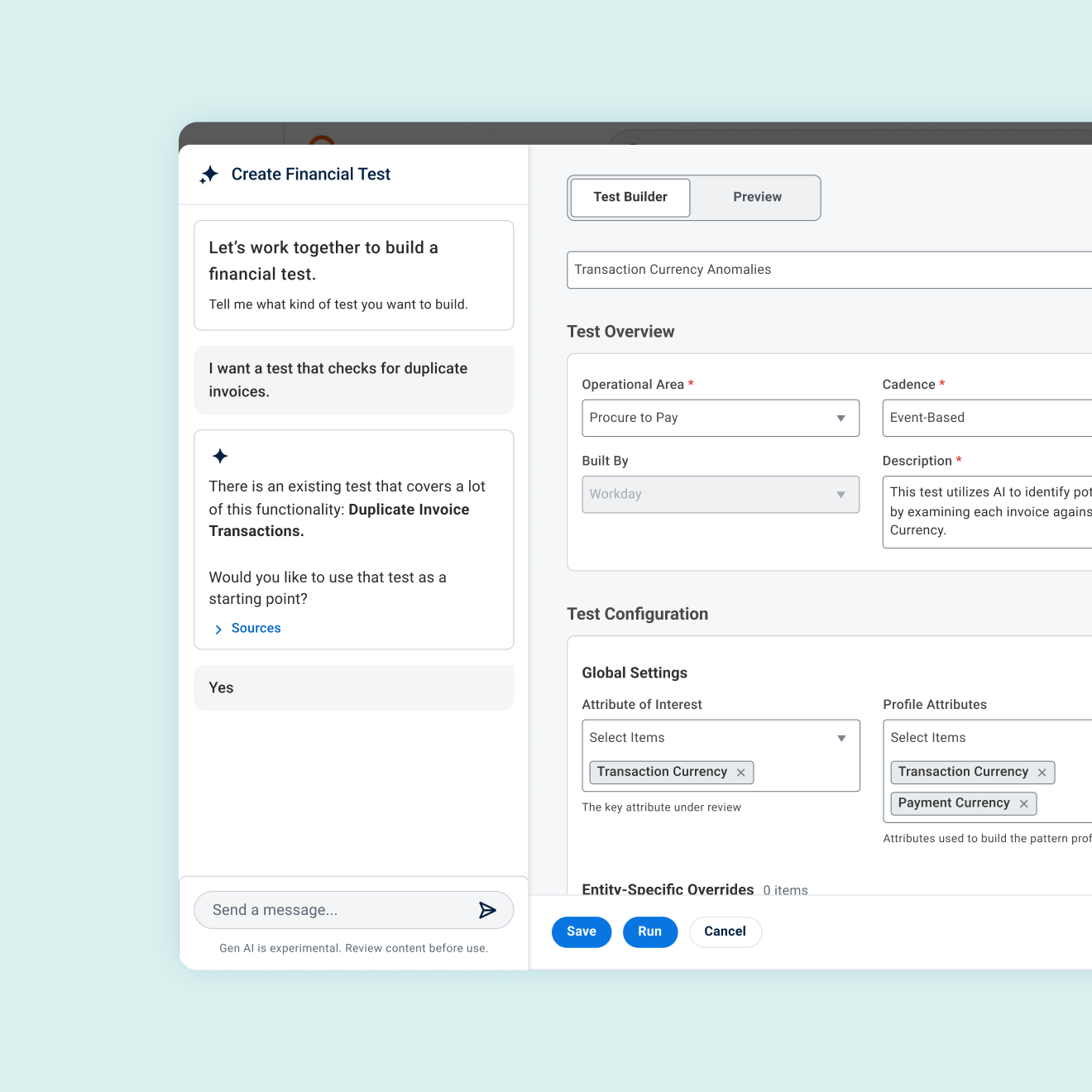

The canvas interface showing the chat on the left and the structured test grid on the right

Defining the co-creation pattern

While the path of least resistance was to adopt the standard "Ask Workday" chat interface, I believed that highly structured, sequenced processes, which describe most financial use cases, would be better served by a hybrid approach. Financial users live in tables and grids; they need to see the work to certify it. This led me to design a split-screen co-creation canvas that acts as a "Cursor for Finance."

In this model, the left pane handles conversational intent, such as requesting a scan for duplicate invoices, while the right pane generates the structured output. Crucially, the Agent produces a fully configured, editable Workday Grid rather than a text block, bridging the gap between novel generative AI and trusted, familiar UI paradigms.

Designing for trust

In the context of financial records, an AI hallucination is a potential liability. We deliberately shifted the design philosophy from automation to augmentation to preserve human oversight. When the Agent flags an anomaly, such as a duplicate payment, the underlying invoices are always on hand so the user can audit the AI's logic instantly. Furthermore, the system is designed never to auto-correct. It prepares the remediation artifact, such as a suspension request, but strictly requires the human professional to apply the final stamp of approval.

Proactive and easy-to-consume remediation actions

Beyond the demo

The rapid sprint to Rising meant strictly prioritising the "happy path", forcing us to sideline deeper design explorations. During the conference week, typically a period for teams to rest and reset, I used the downtime to revisit those sidelined ideas and push the boundaries of the concept.

I upskilled in Lovable and prototyped capabilities such as "Intelligent Action Surfacing". This explored moving beyond simple alerts to generative remediation, where the system automatically constructs remediation workflows for user approval. Post-conference, these prototypes became valuable inputs to the product roadmap and influenced subsequent engineering spikes by the AI team.

Remediation workflow in Lovable

Intelligent action surfacing in Lovable

The structured and editable co-creation canvas

Learnings

Familiarity builds trust

In the consumer space, chat is king. However, financial professionals think in grids, ledgers, and immutable records. To drive adoption, we had to anchor the AI experience in structured, editable artifacts. The co-creation canvas proved that the most effective AI interface isn't one that mimics a human conversation, but one that respects the user's existing mental model.

Friction is necessary in high stakes environments

Seamless automation terrifies a CFO who is personally liable for the results. We had to design positive friction to force a moment of human judgment. By adopting the accounting world's “Preparer & Reviewer” model, we introduced that friction through a workflow users already knew: the Agent acts as the preparer, scanning thousands of lines to flag anomalies and draft the remediation artifact, while the user acts as the reviewer. This ensures every automated action is defensible to an auditor.